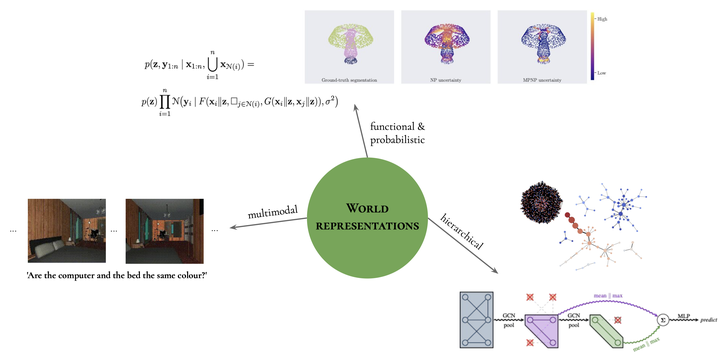

An illustration of the three aspects explored in this thesis that can benefit world representations.

Abstract

This thesis presents three research works that study and develop likely aspects of future intelligent agents. The first contribution centers on vision-and-language learning, introducing a challenging embodied task that shifts the focus of an existing one to the visual reasoning problem. By extending popular visual question answering (VQA) paradigms, I also designed several models that were evaluated on the novel dataset. This produced initial performance estimates for environment understanding, through the lens of a more challenging VQA downstream task. The second work presents two ways of obtaining hierarchical representations of graph-structured data. These methods either scaled to much larger graphs than the ones processed by the best-performing method at the time, or incorporated theoretical properties via the use of topological data analysis algorithms. Both approaches were competitive with contemporary state-of-the-art graph classification methods, even outside social domains in the second case, where the inductive bias was PageRank-driven. Finally, the third contribution delves further into relational learning, presenting a probabilistic treatment of graph representations in complex settings such as few-shot learning, multi-task learning, and label-scarce data regimes. By adding relational inductive biases to neural processes, the resulting framework can model an entire distribution of functions that generate structured datasets. This yielded significant performance gains, especially in the aforementioned complex scenarios, with semantically accurate uncertainty estimates that drastically improved over the neural process baseline. This type of framework may eventually contribute to developing lifelong-learning systems, due to its ability to adapt to novel tasks and distributions. (Full abstract on the thesis webpage)